Senior Technical Architect @ Blue Ripples Technologies

We have deployed a monitoring application on the Linode Kubernetes Engine, utilizing Azure Pipelines for the deployment process. In this article, I will outline the details of the Kubernetes deployment for the various components we have used.

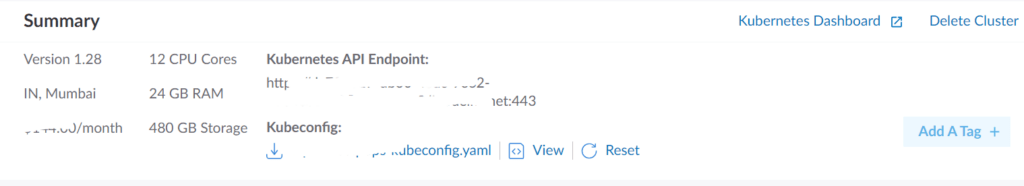

We used Linode to set up the Kubernetes cluster with LKE. Setting up Kubernetes on Linode is straightforward, and the steps are covered in the Linode documentation.

We set up a three-node cluster with 8 GB of RAM per node.

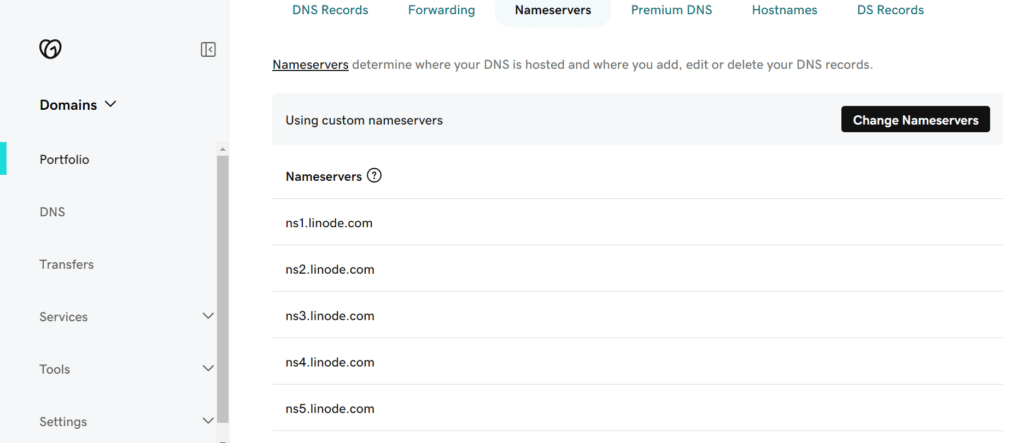

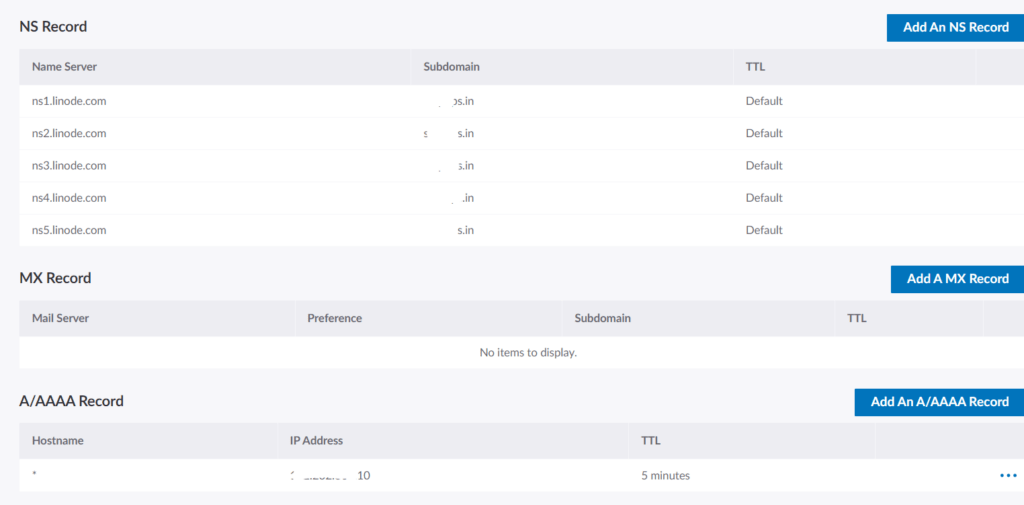

In the next step, we created a domain mapping inside Linode. We purchased a domain from GoDaddy and mapped it in Linode. To configure a domain with Linode, we need to set up the DNS nameserver mappings with the following entries.

To configure the mapping in Linode, we need to set up the domain with the following configuration. We need to add NS records and A/AAAA records in Linode to route the domain to our LKE cluster.

Now all our initial configuration is complete. In the next steps we need to deploy the relevant components and services in our LKE cluster. The following sections explain the approaches we followed to deploy the various components.

To manage container artifacts, we set up a Docker registry inside our Kubernetes cluster, with Linode Object Storage as the storage backend. We created the registry inside our LKE cluster using the steps described in this guide: https://www.linode.com/docs/guides/how-to-setup-a-private-docker-registry-with-lke-and-object-storage
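The Ingress manifests in this article carry the annotation `cert-manager.io/cluster-issuer: letsencrypt-prod`, so a cert-manager ClusterIssuer with that name has to exist in the cluster. The following is a minimal sketch of such an issuer, not our exact configuration. It assumes cert-manager is already installed and that HTTP-01 challenges are solved through the `nginx` ingress class; the e-mail address and the account-key secret name are placeholders.

```yaml
# Sketch of a Let's Encrypt production issuer (assumed setup, placeholder values).
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-prod              # must match the cluster-issuer annotation on each Ingress
spec:
  acme:
    server: https://acme-v02.api.letsencrypt.org/directory
    email: admin@example.com          # placeholder contact address
    privateKeySecretRef:
      name: letsencrypt-account-key   # hypothetical secret that stores the ACME account key
    solvers:
    - http01:
        ingress:
          class: nginx                # solve challenges through the nginx ingress controller
```

With an issuer like this in place, cert-manager creates the TLS secret named in each Ingress `tls` section once the hostname resolves to the cluster.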

We created an Ingress resource to access the registry through a domain URL.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod
    meta.helm.sh/release-name: docker-registry
    meta.helm.sh/release-namespace: default
    nginx.ingress.kubernetes.io/proxy-body-size: "0"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "600"
  generation: 2
  labels:
    app: docker-registry
    app.kubernetes.io/managed-by: Helm
    chart: docker-registry-2.2.2
    heritage: Helm
    release: docker-registry
  name: docker-registry
  namespace: default
spec:
  ingressClassName: nginx
  rules:
  - host: registry.xxxxx.in
    http:
      paths:
      - backend:
          service:
            name: docker-registry
            port:
              number: 5000
        path: /
        pathType: Prefix
  tls:
  - hosts:
    - registry.xxxxxs.in
    secretName: letsencrypt-secret-prod
```

We used Apache APISIX as our API gateway. Apache APISIX provides rich traffic management features such as load balancing, dynamic upstreams, canary releases, circuit breaking, authentication, and observability. APISIX ships with a rich set of plugins for integrations and also provides the flexibility to write and integrate custom plugins. Lua is the default language for writing plugins, but users can also write external plugins in programming languages such as Java, Python, and Go.

We configured Apache APISIX using its Helm chart (https://apisix.apache.org/blog/2021/12/15/deploy-apisix-in-kubernetes).

The following components will be deployed as part of the installation.

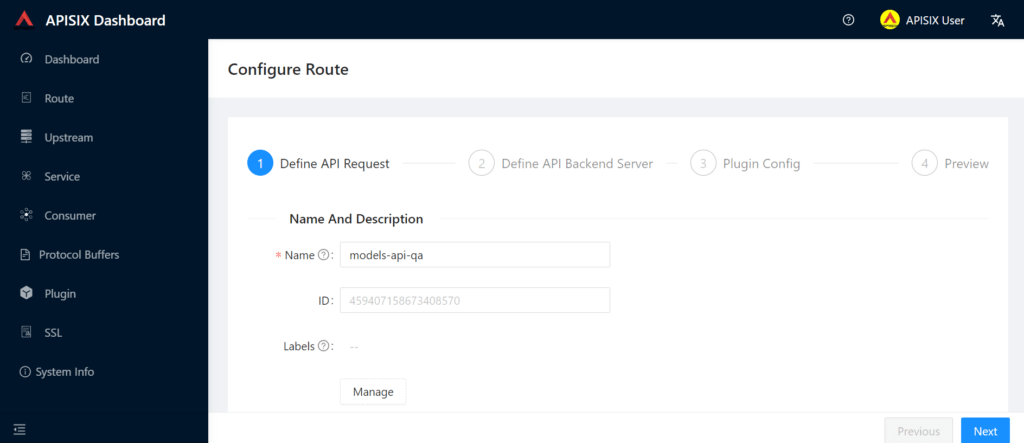

We also configured the APISIX admin dashboard, which provides a basic UI for managing routes and upstream configurations. It can be deployed using its Helm chart (https://apisix.apache.org/docs/helm-chart/apisix-dashboard).
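Routes can also be declared as Kubernetes resources instead of being created through the dashboard, which makes them easy to keep in version control. The following is a minimal sketch, assuming the APISIX ingress controller and its `ApisixRoute` CRD (`apisix.apache.org/v2`) are installed. The route names, the backend services `monitoring-api-dev` and `monitoring-api-qa`, and port 8080 are hypothetical; the hostnames follow the redacted pattern used elsewhere in this article.

```yaml
# Sketch: host-based routing to separate dev and QA backends (hypothetical service names).
apiVersion: apisix.apache.org/v2
kind: ApisixRoute
metadata:
  name: monitoring-api-routes
  namespace: default
spec:
  http:
  - name: dev-api
    match:
      hosts:
      - dev-api.xxxxx.in
      paths:
      - /*
    backends:
    - serviceName: monitoring-api-dev   # hypothetical dev backend Service
      servicePort: 8080
  - name: qa-api
    match:
      hosts:
      - qa-api.xxxxxx.in
      paths:
      - /*
    backends:
    - serviceName: monitoring-api-qa    # hypothetical QA backend Service
      servicePort: 8080
```

Because each rule matches on the request host, one gateway can serve several environments without their routes interfering with each other.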

We configured Ingress for the dev and QA deployments through a single API gateway: all API requests for QA, dev, and the other deployments are routed to the same APISIX gateway. The following configuration sets up the Ingress for the APISIX gateway in our deployment.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod
    nginx.ingress.kubernetes.io/proxy-body-size: "0"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "600"
  labels: {}
  name: gateway-api
  namespace: default
spec:
  ingressClassName: nginx
  rules:
  - host: api.xxxxx.in
    http:
      paths:
      - backend:
          service:
            name: apisix-gateway
            port:
              number: 80
        path: /
        pathType: Prefix
  - host: qa-api.xxxxxx.in
    http:
      paths:
      - backend:
          service:
            name: apisix-gateway
            port:
              number: 80
        path: /
        pathType: Prefix
  - host: demo-api.xxxxx.in
    http:
      paths:
      - backend:
          service:
            name: apisix-gateway
            port:
              number: 80
        path: /
        pathType: Prefix
  - host: dev-api.xxxxx.in
    http:
      paths:
      - backend:
          service:
            name: apisix-gateway
            port:
              number: 80
        path: /
        pathType: Prefix
  - host: web1.xxxxx.in
    http:
      paths:
      - backend:
          service:
            name: apisix-gateway
            port:
              number: 80
        path: /
        pathType: Prefix
  - host: web2.xxxxx.in
    http:
      paths:
      - backend:
          service:
            name: apisix-gateway
            port:
              number: 80
        path: /
        pathType: Prefix
  - host: xxxx-dev-api.xxxxx.in
    http:
      paths:
      - backend:
          service:
            name: apisix-gateway
            port:
              number: 80
        path: /
        pathType: Prefix
  - host: xxxx-api.xxxxxx.in
    http:
      paths:
      - backend:
          service:
            name: apisix-gateway
            port:
              number: 80
        path: /
        pathType: Prefix
  tls:
  - hosts:
    - api.xxxxxx.in
    secretName: xxxxxx-api-secret
  - hosts:
    - qa-api.xxxxxx.in
    secretName: xxxxxx-api-qa-secret
  - hosts:
    - demo-api.xxxxxx.in
    secretName: xxxxxx-api-demo-secret
  - hosts:
    - dev-api.xxxxxx.in
    secretName: xxxxxx-api-dev-secret
  - hosts:
    - web1.xxxxxx.in
    secretName: xxxxxx-api-web-dev-secret
  - hosts:
    - web2.xxxxxx.in
    secretName: xxxxxx-api-web2-dev-secret
  - hosts:
    - conworx-dev-api.xxxxxx.in
    secretName: conworx-dev-api-secret
  - hosts:
    - xxxx-api.xxxxxx.in
    secretName: xxxx-api-secret
```

We need to handle large volumes of time-series data, for which we use TimescaleDB.

We use PostgreSQL as our relational database to manage configuration and GIS data (PostGIS). Additionally, we use TimescaleDB, a PostgreSQL extension, within the same instance to handle our time-series data. Services inside the LKE cluster communicate with the database over its private IP.

As a side note, avoid using public IP addresses for internal communication, as it incurs additional data transfer costs.
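One way to keep the private IP out of application configuration is to expose it inside the cluster under a stable DNS name, using a Service without a selector plus a matching Endpoints object. This is a sketch under assumptions rather than our exact setup: it assumes the database runs outside the cluster on a Linode reachable over the private network, the name `timescaledb` is hypothetical, and `192.168.128.10` is a placeholder address.

```yaml
# Sketch: in-cluster DNS name for an external database on a private IP (placeholder values).
apiVersion: v1
kind: Service
metadata:
  name: timescaledb            # hypothetical name; pods connect to timescaledb.default.svc.cluster.local
  namespace: default
spec:
  ports:
  - name: postgres
    port: 5432
    targetPort: 5432
---
apiVersion: v1
kind: Endpoints
metadata:
  name: timescaledb            # must match the Service name
  namespace: default
subsets:
- addresses:
  - ip: 192.168.128.10         # placeholder for the database's private IP
  ports:
  - name: postgres             # must match the Service port name
    port: 5432
```

Applications then use the Service name as the database host, and a change of the database address only requires updating the Endpoints object.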

To store and access network models of the energy distribution system, we use ArcadeDB, a graph database platform.

ArcadeDB is a new generation of DBMS (DataBase Management System) that runs pretty much on every hardware/software configuration. ArcadeDB is multi-model, which means it can work with graphs, documents and other models of data.

We set up ArcadeDB using the following configuration.

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  creationTimestamp: "2023-08-21T13:28:18Z"
  generation: 1
  labels:
    app: arcadedb
  name: arcadedb
  namespace: default
  resourceVersion: "85790278"
  uid: a60ae286-2db1-435c-bf19-07ac180b6be0
spec:
  persistentVolumeClaimRetentionPolicy:
    whenDeleted: Retain
    whenScaled: Retain
  podManagementPolicy: OrderedReady
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: arcadedb
  serviceName: arcadedb
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: arcadedb
    spec:
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - podAffinityTerm:
              labelSelector:
                matchExpressions:
                - key: app
                  operator: In
                  values:
                  - arcadedb
              topologyKey: kubernetes.io/hostname
            weight: 100
      containers:
      - command:
        - bin/server.sh
        - -Darcadedb.dumpConfigAtStartup=true
        - -Darcadedb.server.name=${HOSTNAME}
        - -Darcadedb.server.rootPassword=xxxxxxxxxx
        - -Darcadedb.server.databaseDirectory=/mnt/data0/databases
        - -Darcadedb.ha.enabled=false
        - -Darcadedb.ha.replicationIncomingHost=0.0.0.0
        - -Darcadedb.ha.serverList=arcadedb-0.arcadedb.default.svc.cluster.local:2424
        - -Darcadedb.ha.k8s=true
        - -Darcadedb.server.plugins=Postgres:com.arcadedb.postgres.PostgresProtocolPlugin
        - -Darcadedb.ha.k8sSuffix=.arcadedb.default.svc.cluster.local
        env:
        - name: POD_IP
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: status.podIP
        image: arcadedata/arcadedb:23.1.2
        imagePullPolicy: IfNotPresent
        name: arcadedb
        ports:
        - containerPort: 2480
          name: http
          protocol: TCP
        - containerPort: 2424
          name: rpc
          protocol: TCP
        resources:
          limits:
            cpu: 500m
          requests:
            cpu: 400m
            memory: 512Mi
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        volumeMounts:
        - mountPath: /mnt/data0
          name: datadir
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
  updateStrategy:
    type: RollingUpdate
  volumeClaimTemplates:
  - apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      creationTimestamp: null
      name: datadir
    spec:
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 10Gi
      volumeMode: Filesystem
```

We also configured an Ingress to access the ArcadeDB admin UI.

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

annotations:

cert-manager.io/cluster-issuer: letsencrypt-prod

nginx.ingress.kubernetes.io/proxy-body-size: "0"

nginx.ingress.kubernetes.io/proxy-read-timeout: "600"

nginx.ingress.kubernetes.io/proxy-send-timeout: "600"

labels:

name: arcade

name: arcade

namespace: default

resourceVersion: "81393565"

uid: a26970b8-c3db-4f81-b63e-66352ba2a335

spec:

ingressClassName: nginx

rules:

- host: graph.xxxxxx.in

http:

paths:

- backend:

service:

name: arcadedb

port:

number: 2480

path: /

pathType: Prefix

tls:

- hosts:

- graph.xxxxxx.in

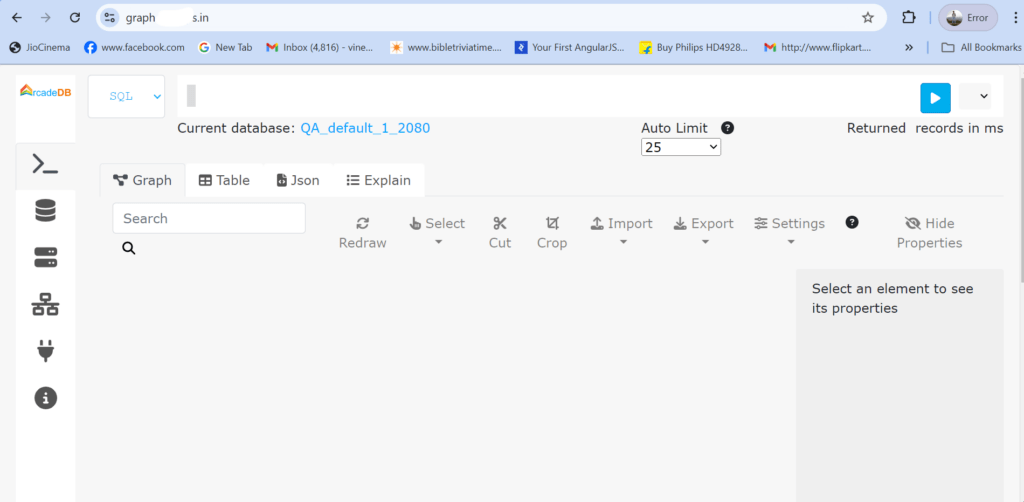

secretName: xxxxx-arcade-secret This will enable public access to the arcadedb UI as in the following picture
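Both the StatefulSet (`serviceName: arcadedb`) and the Ingress backend refer to a Service named `arcadedb`, which is not listed in this article. A minimal sketch of what such a Service could look like is given here, assuming a headless Service so that the pod gets the stable DNS name `arcadedb-0.arcadedb.default.svc.cluster.local` used in the server arguments. The port names mirror the container ports of the StatefulSet; everything else is an assumption.

```yaml
# Sketch: governing Service for the ArcadeDB StatefulSet (assumed, not taken from our cluster).
apiVersion: v1
kind: Service
metadata:
  name: arcadedb
  namespace: default
  labels:
    app: arcadedb
spec:
  clusterIP: None          # headless: gives each StatefulSet pod a stable DNS record
  selector:
    app: arcadedb          # matches the pod label in the StatefulSet template
  ports:
  - name: http
    port: 2480             # HTTP API and admin UI, targeted by the Ingress
    targetPort: http
  - name: rpc
    port: 2424             # binary/replication port
    targetPort: rpc
```

If the Ingress controller in use cannot route to a headless Service, a second regular ClusterIP Service with the same selector and port 2480 would be an alternative.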

We used Azure DevOps for source code repositories, build pipelines, and project management. Azure DevOps provides different integrations for build pipeline configurations, including Kubernetes connections, Docker registry integrations, and more.

Our applications are built with Quarkus, Spring Boot, Python, Apache Spark, and React.

Every application is deployed using Azure Pipelines.
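To illustrate the general shape of such a pipeline, here is a minimal `azure-pipelines.yml` sketch that builds an image, pushes it to the private registry, and applies a manifest to the cluster. It is not one of our actual pipelines. The service connection names `lke-docker-registry` and `lke-cluster`, the repository name `monitoring-service`, the branch name, and the manifest path `k8s/deployment.yaml` are all hypothetical; the registry hostname follows the redacted pattern used above.

```yaml
# Sketch: build, push, and deploy with Azure Pipelines (hypothetical names throughout).
trigger:
  branches:
    include:
    - main

pool:
  vmImage: ubuntu-latest

variables:
  imageRepository: monitoring-service      # hypothetical image name
  tag: $(Build.BuildId)

steps:
- task: Docker@2
  displayName: Build and push image
  inputs:
    command: buildAndPush
    containerRegistry: lke-docker-registry # hypothetical Docker registry service connection
    repository: $(imageRepository)
    Dockerfile: Dockerfile
    tags: |
      $(tag)

- task: KubernetesManifest@1
  displayName: Deploy to LKE
  inputs:
    action: deploy
    connectionType: kubernetesServiceConnection
    kubernetesServiceConnection: lke-cluster   # hypothetical Kubernetes service connection
    namespace: default
    manifests: |
      k8s/deployment.yaml
    containers: |
      registry.xxxxx.in/$(imageRepository):$(tag)
```

The `containers` input makes the deploy task substitute the freshly built image tag into the manifest, so the manifest in the repository does not need to be edited for every build.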